Multiclass Classification


A multiclass classification problem can be split into several binary classification datasets, each used to train its own binary classification model. Such an approach is a heuristic: it is neither optimal nor direct, but it does the job.

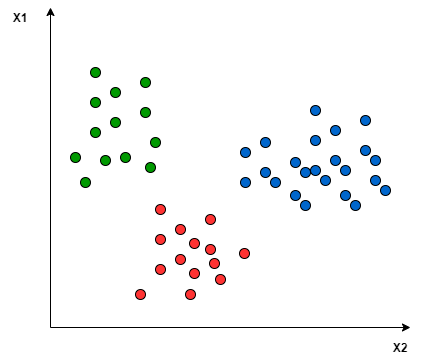

Let’s for instance consider three classes, labelled with their colours and distributed in two dimensions (two input features) like this:

Fig. 12 : 2D distribution of three different classes.

Image: analyticsvidhya.com#

There are two main approaches of this kind to multiclass classification.

One-to-One approach

Definition 21

The One-to-One approach consists of training a binary classifier for each pair of classes, ignoring the points of the other classes.

With a dataset made of \(N^\text{class}\) classes, the number of models to train, \(N^\text{model}\), is the number of distinct pairs of classes:

\[
N^\text{model} = \binom{N^\text{class}}{2} = \frac{N^\text{class} \left( N^\text{class} - 1 \right)}{2}
\]

Each model predicts one class label. The final decision is the class label receiving the most votes, i.e. the label predicted most often.
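The pairwise training and voting described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the method used to produce the figures: the binary model is assumed to be a plain logistic regression fitted by batch gradient descent, three Gaussian blobs stand in for the classes of Fig. 12, and all function names (`fit_logistic`, `fit_one_to_one`, `predict_one_to_one`) are hypothetical.

```python
import itertools
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    """Prepend a column of ones so theta[0] acts as the intercept."""
    return np.hstack([np.ones((len(X), 1)), X])

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Assumed binary model: logistic regression by batch gradient descent; y is 0/1."""
    Xb = add_bias(X)
    theta = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        theta -= lr * Xb.T @ (sigmoid(Xb @ theta) - y) / len(y)
    return theta

def fit_one_to_one(X, y):
    """One binary model per pair (a, b), trained only on the points of a and b."""
    models = {}
    for a, b in itertools.combinations(np.unique(y), 2):
        mask = (y == a) | (y == b)  # ignore the points of all other classes
        models[(a, b)] = fit_logistic(X[mask], (y[mask] == b).astype(float))
    return models

def predict_one_to_one(models, X, classes):
    """Each pairwise model casts one vote per point; the most voted class wins."""
    index = {c: i for i, c in enumerate(classes)}
    votes = np.zeros((len(X), len(classes)), dtype=int)
    for (a, b), theta in models.items():
        says_b = sigmoid(add_bias(X) @ theta) >= 0.5
        winner = np.where(says_b, index[b], index[a])
        votes[np.arange(len(X)), winner] += 1
    return classes[np.argmax(votes, axis=1)]  # ties: the first class wins

# Hypothetical data: three 2D Gaussian blobs with 50 points each
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [2.0, 4.0]])
X = np.vstack([rng.normal(c, 0.7, size=(50, 2)) for c in centers])
y = np.repeat([0, 1, 2], 50)

classes = np.unique(y)
models = fit_one_to_one(X, y)  # 3 classes -> 3 * 2 / 2 = 3 pairwise models
y_pred = predict_one_to_one(models, X, classes)
print(len(models), "models, training accuracy:", np.mean(y_pred == y))
```

Ties in the vote count are broken here by `np.argmax`, which simply picks the first class reaching the maximum; other tie-breaking rules, such as summing the classifiers' confidences, are possible.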

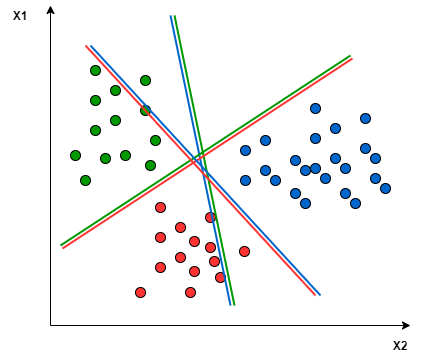

The One-to-One method would create those hyperplanes (with two input features, D = 2 we will have a 1D line as separation):

Fig. 13 : One-to-One approach splits paired datasets, ignoring the points of the other classes.

Image: analyticsvidhya.com#

Pro

The sample size is more balanced between the two chosen classes than if datasets were split with one class against all others.

Con

The number of models grows quadratically with the number of classes, which makes training computationally intensive.

One-to-All approach

Definition 22

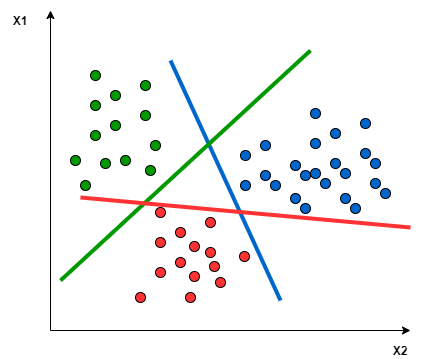

The One-to-All or One-to-Rest approach consists of training each class against the collection of all other classes.

With a dataset made of \(N^\text{class}\) classes, the number of pairs to train is

The final prediction is given by the highest value of the hypothesis function \(h^{k}_\theta(x)\), \(k \in [1, N^\text{model}]\) among the \(N^\text{model}\) binary classifiers.

Fig. 14 : One-to-All approach focuses on one class to discriminate from all other points

(i.e. all other classes are merged into a single ‘background’ class).

Image: analyticsvidhya.com#
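A similar sketch can illustrate the One-to-All approach. It again assumes a logistic regression trained by batch gradient descent as the binary model, uses hypothetical helper names (`fit_one_to_all`, `predict_one_to_all`) and made-up three-blob data: each class \(k\) gets its own binary dataset where class \(k\) is labelled 1 and all other classes are merged into a background labelled 0, and the prediction keeps the class whose \(h^{k}_\theta(x)\) is highest.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def add_bias(X):
    """Prepend a column of ones so theta[0] acts as the intercept."""
    return np.hstack([np.ones((len(X), 1)), X])

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Assumed binary model: logistic regression by batch gradient descent; y is 0/1."""
    Xb = add_bias(X)
    theta = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        theta -= lr * Xb.T @ (sigmoid(Xb @ theta) - y) / len(y)
    return theta

def fit_one_to_all(X, y):
    """One model per class k: k is relabelled 1, all other classes merged into 0."""
    classes = np.unique(y)
    thetas = np.array([fit_logistic(X, (y == k).astype(float)) for k in classes])
    return classes, thetas  # thetas has shape (N_class, D + 1)

def predict_one_to_all(classes, thetas, X):
    """Evaluate every h^k_theta(x) and keep the class with the highest value."""
    h = sigmoid(add_bias(X) @ thetas.T)  # shape (m, N_class)
    return classes[np.argmax(h, axis=1)]

# Hypothetical data: three 2D Gaussian blobs with 50 points each
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [4.0, 0.0], [2.0, 4.0]])
X = np.vstack([rng.normal(c, 0.7, size=(50, 2)) for c in centers])
y = np.repeat([0, 1, 2], 50)

classes, thetas = fit_one_to_all(X, y)  # 3 classes -> 3 models
y_pred = predict_one_to_all(classes, thetas, X)
print(len(thetas), "models, training accuracy:", np.mean(y_pred == y))
```

Unlike the voting scheme of One-to-One, this decision compares the outputs of independently trained models, which implicitly assumes their scores are on comparable scales.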

Pro

Fewer binary classifiers to train.

Con

Because the negative/background class merges the data points of all the other classes, the positive/signal class contains comparatively few data points. The model may then fail to learn the patterns that identify the rare positive class, because misclassifying those few points contributes little to the overall cost.
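To make the balance argument concrete, the sketch below counts the fraction of positive examples in each binary dataset for a hypothetical dataset of 10 equally populated classes. Under One-to-All each positive class makes up only 1/10 of its dataset, whereas each One-to-One dataset is split 50/50, at the price of 45 models instead of 10. The helper names are illustrative only.

```python
import itertools
import numpy as np

def one_to_all_positive_fractions(y):
    """Fraction of label-1 points in each 'class k vs rest' dataset."""
    return {k: float(np.mean(y == k)) for k in np.unique(y)}

def one_to_one_positive_fractions(y):
    """Fraction of label-1 points (class b) in each 'class a vs class b' dataset."""
    fractions = {}
    for a, b in itertools.combinations(np.unique(y), 2):
        pair_labels = y[(y == a) | (y == b)]  # keep only the two classes of the pair
        fractions[(a, b)] = float(np.mean(pair_labels == b))
    return fractions

# Hypothetical labels: 10 classes with 100 points each
y = np.repeat(np.arange(10), 100)

ovr = one_to_all_positive_fractions(y)
ovo = one_to_one_positive_fractions(y)
print(len(ovr), sorted(set(ovr.values())))  # 10 models, 10% positives in each
print(len(ovo), sorted(set(ovo.values())))  # 45 models, each split 50/50
```

With unequal class sizes the One-to-All imbalance only gets worse for the smallest classes, while each One-to-One dataset inherits just the imbalance between its two classes.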

Further reading

Some further reading if you are curious:

Learn more

Exercise

The scatter plots above show the data points in the feature space with the decision boundaries.

Where is the sigmoid function?

Try to find a visualization that makes the sigmoid function more visible.

Hint

Perhaps a 3D plot can help you here…